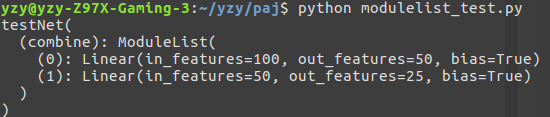

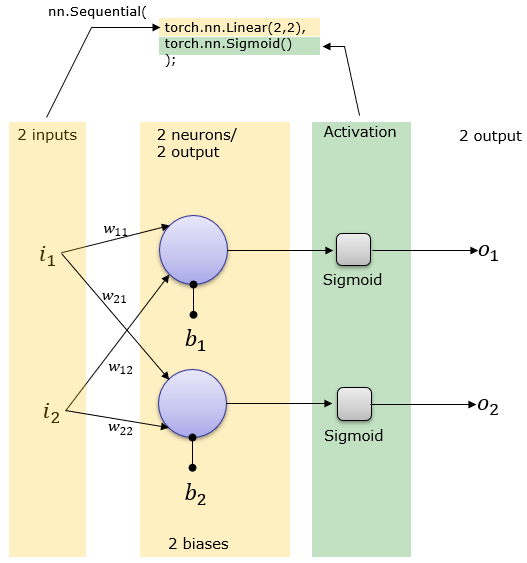

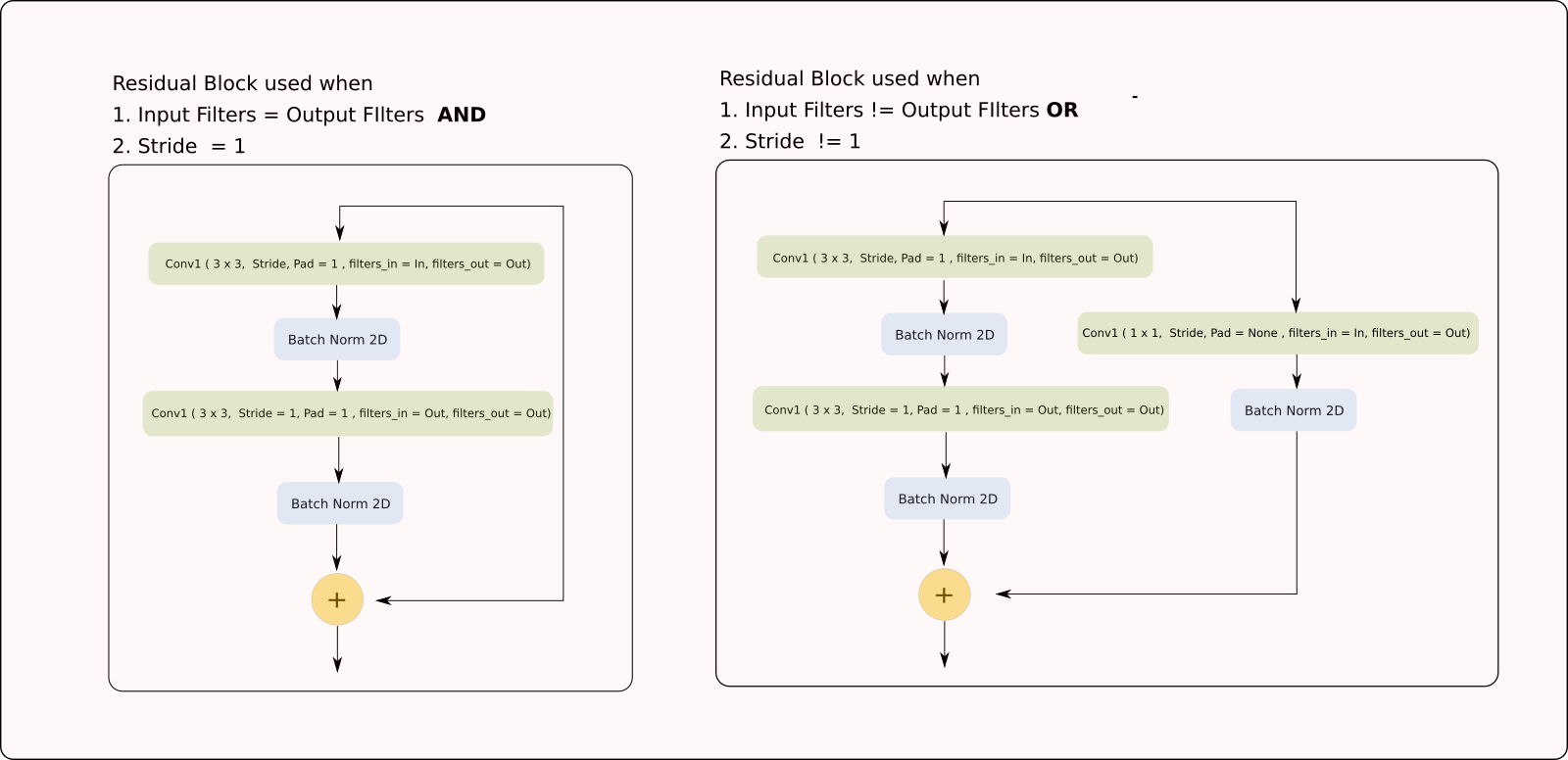

    self.mu_func_call = self.mu_func(in_features, h1 = h1, out_features = out_features)
    ('shape_fc0', nn.Linear(in_features=in_features,out_features=h1)),
    ('shape_fc1L:final', nn.Linear(in_features=h1,out_features=out_features))
    pyro.nn.module.to_pyro_module_(self.shape)
    for name, param in _parameters():
        setattr(m, name, PyroSample(prior=dist.Laplace(0., 3.)

Then, I can just reference model.parameter_names to get those names later. The names are conveniently returned as expected when using Predictive, e.g.

Glad that it works for your case.

    (0): Linear(in_features=10, out_features=4, bias=True)
    (1): BatchNorm1d(4, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (0): Linear(in_features=4, out_features=5, bias=True)
    (1): BatchNorm1d(5, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (0): Linear(in_features=5, out_features=1, bias=True)
    (1): BatchNorm1d(1, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

We can actually see that we have these repeating blocks. Phew! This saved us from defining these 9 individual layers one by one.

VGG networks were among the first very deep CNNs. Let's try to recreate one. VGG has two types of blocks, defined in this paper.

Figure 1. The two types of VGG blocks: the two-layer ones (blue, orange) and the three-layer ones (purple, green, red). Image taken from this datasciencecentral article.

    (2): MaxPool2d(kernel_size=(2, 2), stride=2, padding=0, dilation=1, ceil_mode=False)
    (4): MaxPool2d(kernel_size=(2, 2), stride=2, padding=0, dilation=1, ceil_mode=False)
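As a rough illustration of the "repeating blocks" idea, here is a minimal sketch that rebuilds the Linear + BatchNorm1d printout with a small helper instead of listing every layer by hand. The helper name `linear_bn_block`, the `sizes` list, and the batch of 8 samples are assumptions made for this example; only the layer widths (10, 4, 5, 1) come from the printout.

```python
import torch
from torch import nn


def linear_bn_block(in_features: int, out_features: int) -> nn.Sequential:
    """One repeating unit: a Linear layer followed by BatchNorm1d (hypothetical helper)."""
    return nn.Sequential(
        nn.Linear(in_features, out_features),
        nn.BatchNorm1d(out_features),
    )


# Layer widths taken from the printout: 10 -> 4 -> 5 -> 1.
sizes = [10, 4, 5, 1]

# Build one block per consecutive pair of widths.
model = nn.Sequential(
    *(linear_bn_block(i, o) for i, o in zip(sizes[:-1], sizes[1:]))
)

print(model)

# Forward pass on a made-up batch of 8 samples with 10 features each.
out = model(torch.randn(8, 10))
print(out.shape)
```

Printing `model` shows three nested `Sequential` blocks, each holding a `(0): Linear` and a `(1): BatchNorm1d` entry, which is the pattern in the printout.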

I guess you can do

    theta = nn.Sequential(

Using the idea from here:

    from collections import OrderedDict


Your code:

    def __init__(self, in_features, h1 = 2, out_features = 1):

With a = A(), I think you can do a.named_parameters() to get the names of the parameters of theta. It works the same way as the m.named_parameters(recurse=False) call in your code. If your nn.Module has two submodules mu and theta, then the parameter names will look like "mu.linear.weight". I would recommend playing a bit with some PyTorch modules like Sequential to see how naming works in PyTorch.
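To make the naming concrete, here is a small plain-PyTorch sketch (no Pyro involved). The class `A` and its layer layout are hypothetical stand-ins for the model in this thread, not the original code: `mu` wraps one named layer via an `OrderedDict`, and `theta` is an unnamed two-layer `nn.Sequential`.

```python
from collections import OrderedDict

from torch import nn


class A(nn.Module):
    """Hypothetical stand-in for the model discussed in the thread."""

    def __init__(self, in_features, h1=2, out_features=1):
        super().__init__()
        # Named entry: parameters show up under "mu.linear.*".
        self.mu = nn.Sequential(
            OrderedDict([("linear", nn.Linear(in_features, out_features))])
        )
        # Unnamed entries: nn.Sequential falls back to "0", "1", ...
        self.theta = nn.Sequential(
            nn.Linear(in_features, h1),
            nn.Linear(h1, out_features),
        )


a = A(in_features=3)

# Full dotted names, recursing into submodules.
names = [name for name, _ in a.named_parameters()]
print(names)

# recurse=False only lists parameters owned directly by `a` (none here).
direct = [name for name, _ in a.named_parameters(recurse=False)]
print(direct)
```

The names follow the attribute path, so an unnamed `nn.Sequential` yields `theta.0.weight`, `theta.1.bias`, and so on, while passing an `OrderedDict` lets you pick the middle part yourself.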

In general though, is there a way to get all possible options to use in return_sites? I looked at poutine but could not get that to work.

    def __init__(self, in_features, h1 = 2, out_features = 1):


I'm continuing on the model I've described here, adding complexity bit by bit. I've now updated theta to be modeled as a two-layer nn.Sequential. Relevant code snippet (some lines removed to make it more clear):

    def __init__(self, in_features, h1 = 2, out_features = 1):
    pyro.nn.module.to_pyro_module_(self.theta)
    for name, value in list(m.named_parameters(recurse=False)):
        setattr(m, name, PyroSample(prior=dist.Laplace(0, 2)
    obs = pyro.sample("obs", GammaHurdle(concentration = shape, rate = shape / mu, theta = theta), obs=y)

I'd like to look at the distributions of those parameters using Predictive. How do the sites get named for theta? With mu, for example, if I use self.linear = PyroModule(.), I can use Predictive(model, guide, num_samples, return_sites = ("linear.weight")). But I can't figure out how theta gets named and how to access that distribution.
